Development of a Talking Tactile Tablet

Researchers have long understood the value of tactile pictures, maps and diagrams for readers who are blind or otherwise visually impaired (Edman, 1992). However, several practical problems have always limited the usefulness and appeal of these materials. It is often difficult for a blind reader to make sense of tactile shapes and textures without additional information to confirm or augment what has been touched (Kennedy, Gabias & Nicholls, 1991). Labeling a drawing with Braille is one way to provide this information, but because Braille labels must be large and surrounded by plenty of blank space to be legible, they are poorly suited to complex or graphically rich images. Reliance on Braille labeling also restricts the usefulness of tactile graphics to blind or visually impaired people who are competent Braille readers, a lamentably small population.

To enrich the tactile graphic experience and to reach a broader range of users, products such as NOMAD have been created. NOMAD was developed by Dr. Don Parkes of the University of New South Wales and first brought to market in 1989 (Parkes, 1994). When connected to a computer, NOMAD promised to enhance the tactile experience by allowing a user to explore pictures, graphs and diagrams by touch, and then to press on individual features to hear descriptions, labels and other explanatory audio material. Further, NOMAD aspired to offer the kind of interactive multimedia experiences that have proliferated in visual computing.

Several factors, however, have prevented NOMAD from being widely adopted. The resolution of its touch-sensitive surface is low, so precise correspondence between graphic images and audio tags is difficult to achieve. Its speech is synthesized, so it is not as clear or "user friendly" as the pre-recorded human speech common in mainstream CD-ROMs. Perhaps most importantly, high-quality interactive programs and tactile media were never produced independently in the quantities the NOMAD creators had envisioned, so the investment in hardware was hard to justify.

Given the immense promise of audio-tactile strategies for opening interactive learning and entertainment to people whose vision problems preclude use of a mouse or video monitor, Touch Graphics was established in 1997. This for-profit company, created in cooperation with Baruch College's Computer Center for Visually Impaired People, has won research and development funding through the Small Business Innovation Research programs of the US Department of Education and the National Science Foundation. To date, the work has produced a prototype Talking Tactile Tablet (TTT) and three interactive programs for use with the device.

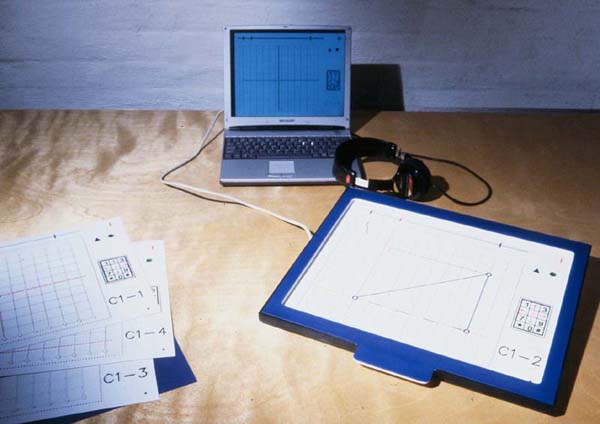

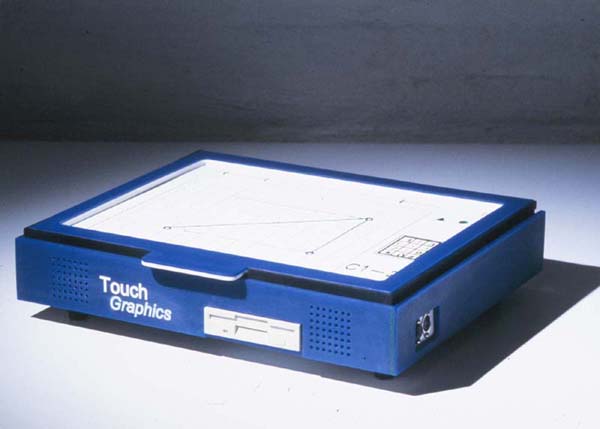

The hardware component of the TTT system is an extremely simple, durable and inexpensive "easel" (fig. 1) that holds tactile graphic sheets motionless against a high-resolution touch-sensitive surface. The pressure of a user's finger is transmitted through any of a variety of flexible tactile graphic overlays to this surface, which is a standard hardened-glass touch screen of the kind typically mounted over a video monitor in ATMs and similar applications. The TTT connects to a host Macintosh or Windows-based computer through a single cable plugged into the computer's USB port. Alternatively, a completely free-standing version (fig. 2) has been created, in which the tablet sits in a "docking station" that incorporates a single-board computer, a hard disk drive, an audio system, and connections for peripheral devices. In both cases, the computer interprets the user's presses on the tactile overlay exactly as it interprets a mouse click while the cursor is over a particular region, icon or other object on a video screen. With appropriate software, the system promises to open the world of "point and click" computing to blind and visually impaired users.
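To make the mouse-click analogy concrete, here is a minimal hit-testing sketch. It assumes each hot spot is an axis-aligned rectangle in sheet coordinates (millimeters); the `HotSpot` class, the `hit_test` function, the region names and the audio file names are all hypothetical, since the paper does not describe the TTT software at this level.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class HotSpot:
    """A hypothetical rectangular region of an overlay, in sheet millimeters."""
    name: str
    x0: float
    y0: float
    x1: float
    y1: float
    audio: str  # hypothetical clip to play when this region is pressed

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1


def hit_test(spots: Sequence[HotSpot], x: float, y: float) -> Optional[HotSpot]:
    """Return the region under a press, as a GUI returns the icon under a click.

    Later entries are treated as lying on top of earlier ones, so the list
    is searched from the end.
    """
    for spot in reversed(spots):
        if spot.contains(x, y):
            return spot
    return None


# Hypothetical overlay: a large workspace with a score icon inside it.
overlay = [
    HotSpot("workspace", 20, 40, 300, 250, "workspace.wav"),
    HotSpot("score_icon", 30, 50, 60, 80, "score.wav"),
]

hit = hit_test(overlay, 45, 60)
print(hit.name if hit else "no region")  # score_icon
```

Searching in reverse order lets a small region such as a button sit on top of a larger enclosing region, much as overlapping windows are handled on a screen.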

Three software titles have been prepared for use with the Talking Tactile Tablet. Each consists of a collection of tactile overlay sheets and a CD-ROM of program files. The user places the CD in the computer's drive and starts a generic "launcher" program that is common to every existing and future application developed for the system. A human-voice narrator leads the user through the setup process, and audio confirmation is provided as each step is successfully completed. A first-time user hears a complete description of the device and its features and is led step by step through launching the application; an "expert mode" bypasses all but the essential instructions and audio cues. For experts and beginners alike, the start-up sequence is as follows:

- The user opens the device's hinged frame, lays a tactile sheet flat on the surface, and closes the frame, which is held shut by a magnet. With the frame shut, the tactile sheet is held motionless against the touch screen. This is crucial, since each region, icon or image on the tactile sheet corresponds to an identically sized and positioned "hot spot" on the touch screen; if the sheet moves during a session, precision is compromised.

- The user is then asked to press three raised dots, which appear in three corners of every tactile sheet. As each dot is pressed, the computer plays a confirming tone. Pressing these dots calibrates the system, a step made necessary by the high resolution of the touch screen. Once the computer knows the positions of the calibration dots, it applies to every subsequent press a correction that compensates for any discrepancy between the actual and idealized positions of the overlay. The tactile shapes must be precisely superimposed over the sensitized regions of the touch screen to guarantee that each object touched triggers the appropriate audio response. This is especially crucial for applications that rely on complex graphic images, such as the Atlas of World Maps.

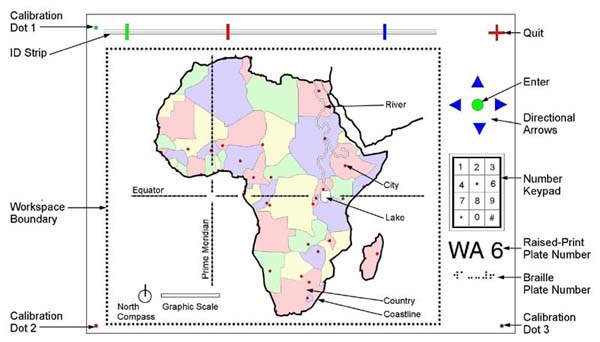

- Next, the user must tell the computer which tactile sheet, out of a potentially large collection, has been mounted. To do this, the user runs a finger along a long horizontal "ID Strip" near the top of the sheet and presses each of three short vertical bars as it is encountered, from left to right. The positions of the three bars are unique to each sheet and act as a coded name tag that the computer can read. Once the third bar has been pressed, the computer speaks the application's title and sheet number and asks the user to confirm that the intended sheet is in place. If it is, the user presses the circle button and begins the game or lesson. An experienced user can complete the entire setup in less than 30 seconds.

- At appropriate moments during the session, the user is asked to place a new tactile sheet on the device, after which the calibration and identification routine described above is repeated. This continues until the user ends the session by pressing the plus-shaped "quit" button in the upper right corner, at which point the system shuts down.
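The calibration step says only that a "correction factor" derived from the three dots is applied to every later press. One plausible model, assumed here rather than taken from the TTT software, is a 2-D affine transform: three non-collinear point pairs determine its six coefficients exactly, and it corrects shift, rotation, scaling and skew of the overlay. The function names and dot coordinates below are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]


def _det3(m: Sequence[Sequence[float]]) -> float:
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(m: List[List[float]], rhs: List[float]) -> List[float]:
    """Solve the 3x3 linear system m @ v = rhs using Cramer's rule."""
    d = _det3(m)
    if abs(d) < 1e-9:
        raise ValueError("calibration dots must not be collinear")
    solution = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = rhs[r]  # replace one column with the right-hand side
        solution.append(_det3(mc) / d)
    return solution


def fit_affine(measured: Sequence[Point], ideal: Sequence[Point]) -> Callable[[Point], Point]:
    """Fit x' = a*x + b*y + c and y' = d*x + e*y + f from three dot pairs.

    `measured` holds where the three dots were actually pressed; `ideal`
    holds where they sit on a perfectly aligned sheet.
    """
    m = [[float(x), float(y), 1.0] for x, y in measured]
    a, b, c = _solve3(m, [float(p[0]) for p in ideal])
    d, e, f = _solve3(m, [float(p[1]) for p in ideal])

    def correct(p: Point) -> Point:
        x, y = p
        return (a * x + b * y + c, d * x + e * y + f)

    return correct


# Hypothetical dot positions (mm); this sheet is offset by (+3, -2) mm.
IDEAL_DOTS = [(10.0, 10.0), (290.0, 10.0), (10.0, 230.0)]
measured_dots = [(13.0, 8.0), (293.0, 8.0), (13.0, 228.0)]

correct = fit_affine(measured_dots, IDEAL_DOTS)
print(correct((150.0, 100.0)))  # (147.0, 102.0)
```

Every press after calibration would pass through `correct` before hit-testing, so a sheet clamped slightly askew still lines up with its hot spots.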
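The ID Strip can be read as a simple combinatorial code. The sketch below assumes, hypothetically, that each bar may sit in one of 20 evenly spaced slots along the strip; the strip offset, slot width, catalog entries and function names are illustrative, not the TTT's actual layout. Under that assumption, three bars in distinct slots give C(20, 3) = 1140 distinguishable sheets.

```python
import math
from typing import Dict, Optional, Sequence, Tuple

# Hypothetical ID Strip geometry (mm); not the TTT's published layout.
STRIP_START_MM = 20.0   # x position of slot 0
SLOT_WIDTH_MM = 12.0    # spacing between candidate bar positions
NUM_SLOTS = 20

SheetCode = Tuple[int, int, int]


def slot_of(x_mm: float) -> int:
    """Snap a press to the nearest bar slot along the strip."""
    slot = round((x_mm - STRIP_START_MM) / SLOT_WIDTH_MM)
    if not 0 <= slot < NUM_SLOTS:
        raise ValueError(f"press at x = {x_mm} mm is off the ID Strip")
    return slot


def decode_sheet(presses_mm: Sequence[float],
                 catalog: Dict[SheetCode, str]) -> Optional[str]:
    """Turn three bar presses into a sheet name, or None if unrecognized."""
    if len(presses_mm) != 3:
        raise ValueError("expected exactly three bar presses")
    slots = sorted(slot_of(x) for x in presses_mm)  # tolerate out-of-order presses
    if len(set(slots)) != 3:
        return None  # two presses snapped to the same slot
    code = (slots[0], slots[1], slots[2])
    return catalog.get(code)


# Hypothetical catalog mapping bar-slot triples to sheets.
CATALOG: Dict[SheetCode, str] = {
    (2, 7, 15): "Match Game, sheet 1",
    (1, 4, 9): "Atlas of World Maps, sheet 3",
}

print(math.comb(NUM_SLOTS, 3))                       # 1140
print(decode_sheet([45.1, 103.2, 201.0], CATALOG))   # Match Game, sheet 1
```

Snapping each press to the nearest slot makes the code tolerant of small finger-placement errors, while the spoken confirmation step catches any misread.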

Touch Graphics has produced the following applications for use with the Talking Tactile Tablet:

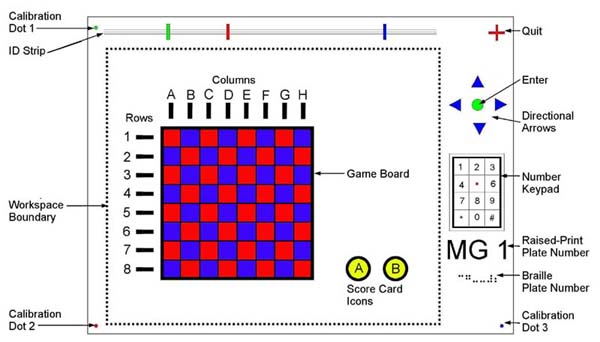

- The Match Game. A tactile/audio memory game (fig. 3) in which children compete with one another or play alone as they search for matching pairs of animal sounds hidden in a grid of boxes. The game provides entertainment and intellectual challenge in a setting where visual impairment does not put the player at a disadvantage. Playing the Match Game may also help develop spatial imaging skills, since a successful player must build and maintain a mental picture of where the hidden sounds lie on the board. The computer randomly reassigns the animal sounds to the squares of the grid each time the game is played. Three skill levels are available, and the winner of each board can earn extra points in a "bonus round."

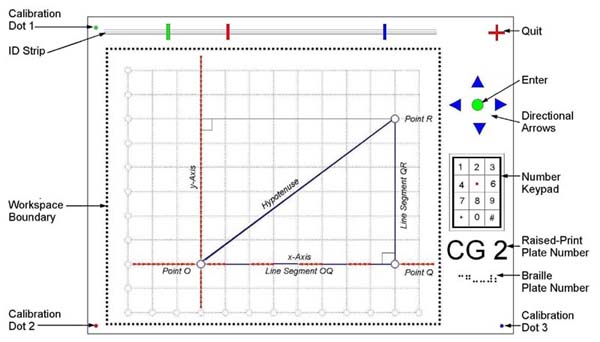

- The first unit in a one-year Pre-Calculus curriculum. One of the most difficult challenges for a blind student who wants to enter a technical or scientific profession is mastering "spatial" math, such as Geometry, Trigonometry and Graphing. Because math education is cumulative, it is almost impossible to succeed at Calculus (needed for college-level study in Engineering and most Sciences) without a good grounding in these foundation areas. Audio-tactile computing makes it possible to present these prerequisite concepts to blind students. The curriculum (fig. 4) contains lessons that the user can move through at his or her own pace, and interactive examples in which the user plugs in values and then listens as the computer solves the problem step by step. Topics covered include the coordinate grid, the distance formula, slope, and equations of lines. At the end of each section, a set of exercises lets the student demonstrate mastery of the material before moving on to the next section or going back to review. The curriculum is based on work by Dr. Albert Blank of the College of Staten Island, in collaboration with the Baruch Center, under funding from the National Science Foundation.

- A Talking Tactile Atlas of World Maps. Developed in collaboration with the National Geographic Maps Division, this Atlas (fig. 5) includes one world map and seven continent map plates. The maps show coastlines, national boundaries, capital cities and major bodies of water. The Atlas has five operational modes. The user can explore at will, touching the tactile maps to hear the names of the places touched. He or she can also select a destination by name from an alphabetical index and listen to the narrator's coaching ("go south… go west… go south… go east… you've found Cairo!") as his or her finger closes in on the requested place. Other modes answer queries for the distance between any two points and provide an almanac of useful information, along with audio clips of the languages spoken in every country. An audio topographic map uses a series of graduated pitches to depict relative elevations above sea level on the continent map plates.
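The Match Game's random reassignment of sounds amounts to shuffling two copies of each sound and dealing them into the grid. A minimal sketch, assuming a 4-by-4 board and eight hypothetical animal sounds; the paper does not give the actual board sizes, skill-level settings or sound list, and `deal_board` and `is_match` are illustrative names.

```python
import random
from typing import List, Optional, Tuple

Board = List[List[str]]


def deal_board(sounds: List[str], rows: int, cols: int,
               rng: Optional[random.Random] = None) -> Board:
    """Place two copies of each sound at random positions in a rows x cols grid."""
    if rows * cols != 2 * len(sounds):
        raise ValueError("the grid must hold exactly two copies of each sound")
    if rng is None:
        rng = random.Random()
    tiles = sounds * 2          # every sound appears exactly twice
    rng.shuffle(tiles)          # a fresh arrangement for each game
    return [tiles[r * cols:(r + 1) * cols] for r in range(rows)]


def is_match(board: Board, a: Tuple[int, int], b: Tuple[int, int]) -> bool:
    """True if two different squares hide the same sound."""
    (r1, c1), (r2, c2) = a, b
    return a != b and board[r1][c1] == board[r2][c2]


# Hypothetical sound list for a 4-by-4 board.
ANIMALS = ["cow", "dog", "duck", "horse", "owl", "pig", "rooster", "sheep"]
board = deal_board(ANIMALS, 4, 4)
for row in board:
    print(row)
```

Passing a seeded `random.Random` makes a board reproducible for testing, while the default draws a new arrangement each game.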
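The Pre-Calculus unit's interactive examples, in which the user plugs in values and listens to a step-by-step solution, can be sketched as a function that turns the distance formula into a list of sentences for the narrator. This is a hypothetical illustration: `distance_steps` and its wording are assumptions, and the curriculum's actual phrasing and audio output are not described in the paper.

```python
import math
from typing import List


def distance_steps(x1: float, y1: float, x2: float, y2: float) -> List[str]:
    """Narrate d = sqrt((x2 - x1)^2 + (y2 - y1)^2) one step at a time."""
    dx, dy = x2 - x1, y2 - y1
    dx2, dy2 = dx * dx, dy * dy
    total = dx2 + dy2
    d = math.sqrt(total)
    return [
        f"Subtract the x values: {x2} minus {x1} is {dx}.",
        f"Subtract the y values: {y2} minus {y1} is {dy}.",
        f"Square each difference: {dx} squared is {dx2}, and {dy} squared is {dy2}.",
        f"Add the squares: {dx2} plus {dy2} is {total}.",
        f"Take the square root of {total}: the distance is {d:g}.",
    ]


# The user "plugs in" the points (1, 2) and (4, 6).
for sentence in distance_steps(1, 2, 4, 6):
    print(sentence)  # a speech or audio-clip player would voice each step (not shown)
```

Returning the steps as a list, rather than speaking them directly, would let the narrator pause between steps or repeat one on request.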
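The Atlas's coaching can be approximated by comparing the finger's position with the target's and naming the direction of the larger remaining offset, so that prompts alternate between north-south and east-west as the finger closes in. The sketch assumes north is at the top of the plate, sheet coordinates in which y grows downward, and a hypothetical 5 mm "found" radius; `coach` and the position given for Cairo are illustrative, not part of the TTT software.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def coach(finger: Point, target: Point, name: str, found_radius: float = 5.0) -> str:
    """Return the next spoken prompt guiding a finger toward a target place.

    Coordinates are sheet millimeters with x growing east (right) and y growing
    south (down the plate), assuming north is at the top of the map.
    """
    dx = target[0] - finger[0]
    dy = target[1] - finger[1]
    if math.hypot(dx, dy) <= found_radius:
        return f"You've found {name}!"
    # Prompt along whichever axis has the larger remaining error.
    if abs(dx) >= abs(dy):
        return "Go east" if dx > 0 else "Go west"
    return "Go south" if dy > 0 else "Go north"


cairo = (182.0, 64.0)  # hypothetical position of Cairo on the Africa plate
for finger in [(150.0, 20.0), (150.0, 62.0), (185.0, 66.0)]:
    print(coach(finger, cairo, "Cairo"))
```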
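The audio topographic map's graduated pitches can be sketched as a lookup from elevation to band and from band to frequency. The band limits, the 220 Hz base pitch and the whole-tone spacing are hypothetical choices for illustration; the paper does not say how many pitches are used or how they are tuned.

```python
import bisect
from typing import List

# Hypothetical elevation band limits in meters; the paper gives no values.
BAND_LIMITS_M: List[float] = [0, 200, 500, 1000, 2000, 3000, 4000]
BASE_HZ = 220.0  # hypothetical pitch for the lowest band (A3)


def elevation_band(elev_m: float) -> int:
    """Band 0 is at or below sea level; each limit exceeded raises the band by one."""
    return bisect.bisect_left(BAND_LIMITS_M, elev_m)


def band_pitch_hz(band: int) -> float:
    """Each band sounds a whole tone (two equal-tempered semitones) higher."""
    return BASE_HZ * 2 ** (2 * band / 12)


for elev in [-20, 150, 800, 4500]:
    band = elevation_band(elev)
    print(elev, band, round(band_pitch_hz(band), 1))
```

Equal-tempered steps make each rise in elevation sound like the same musical interval, which may be easier to judge by ear than equal steps in hertz.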

Additional applications for the TTT system are under consideration now, including:

- Web page interpreter. To improve access to the Internet through an audio/tactile browser;

- Adventure game. To build sound-localization skills through the use of spatially accurate three-dimensional binaural audio, as players move through a virtual environment;

- Spreadsheet builder and reader. To improve access to Microsoft Excel using an audio/tactile representation of alphanumeric grids;

- Standardized math assessment delivery system. To improve access for blind and visually impaired students in high-stakes testing;

- Voting device. To allow blind individuals to independently (and privately) register choices for elected officials;

- Authoring system. To allow teachers to create their own audio-tagged tactile drawings.

With the TTT system, the developers aspire to promulgate a uniform strategy for interactive multimedia applications based on tactile graphics. We are confident that the low cost of the system and the range of professionally produced software titles already available will demonstrate to interested individuals, schools, libraries and community facilities that an investment in the hardware component is justified [1]. As we build a critical mass of professionally produced audio-tactile media, we expect to be able to assure customers that future applications conforming to the same standards will become available to augment their collections. The same strategy has proven successful in mainstream computing, as in the emergence of the Windows graphical user interface as a global standard.

The developers look forward to a time when, through the introduction of accessible computerized multimedia materials, blind and visually impaired people have the same opportunities for education, entertainment, and camaraderie that the sighted world enjoys.

FIGURES

Fig. 1: The Talking Tactile Tablet, shown connected to a host notebook computer, with an assortment of tactile overlays. The device is about 15 inches wide, 12 inches deep and 0.5 inches thick. A cable emerges from the left side of the unit, and a tab for lifting the hinged frame runs along the front edge.

Fig. 2: The Talking Tactile Computer with Docking Station. This is the same as Fig. 1, except that the device rests on a rectangular unit 16 inches wide, 13 inches deep and 2.5 inches high, with a floppy disk drive and stereo speakers on the front edge and plugs for peripheral devices on the right side.

Fig. 3: The Match Game overlay, with labels showing the function of each element. The graphic shows the standard Tactile Graphic User Interface, which includes the three setup dots in the corners, the ID Strip running along the top edge, the control buttons in the upper right corner and a numerical keypad along the right side. Within the dotted-line box of the "workspace" are the Match Game board and icons that can be pressed to hear the players' scores.

Fig. 4: A sample plate from the coordinate geometry curriculum, with labels showing the function of each element. The standard Tactile Graphic User Interface is identical to that described for the Match Game (see Fig. 3, above). Within the dotted-line box of the "workspace" is the coordinate grid, with bumpy lines showing the x and y axes. A triangle is shown to demonstrate the Pythagorean Theorem; each vertex of the triangle is labeled, as are the individual line segments.

Fig. 5: The "Africa" continent plate from the "Talking Tactile Atlas of World Maps" with explanatory labels.

NOTES

[1] The projected cost of the TTT is $400. Applications will cost between $100 and $200 each, depending on their complexity and the number of tactile overlays included.

REFERENCES

Edman, P. K. (1992). Tactile graphics. New York: American Foundation for the Blind.

Kennedy, J., Gabias, P., & Nicholls, A. (1991). Tactile pictures. In M. Heller & W. Schiff (Eds.), The psychology of touch. New Jersey: Lawrence Erlbaum Associates.

Parkes, D. (1994). Audio tactile systems for designing and learning complex environments as a vision impaired person: Static and dynamic spatial information access. In J. Steele & J. G. Hedberg (Eds.), Learning Environment Technology: Selected papers from LETA 94 (pp. 219-223). Canberra: AJET Publications.